Expert Tech Insights: Unleashing the Power of Windows, Microsoft 365, and Microsoft Azure

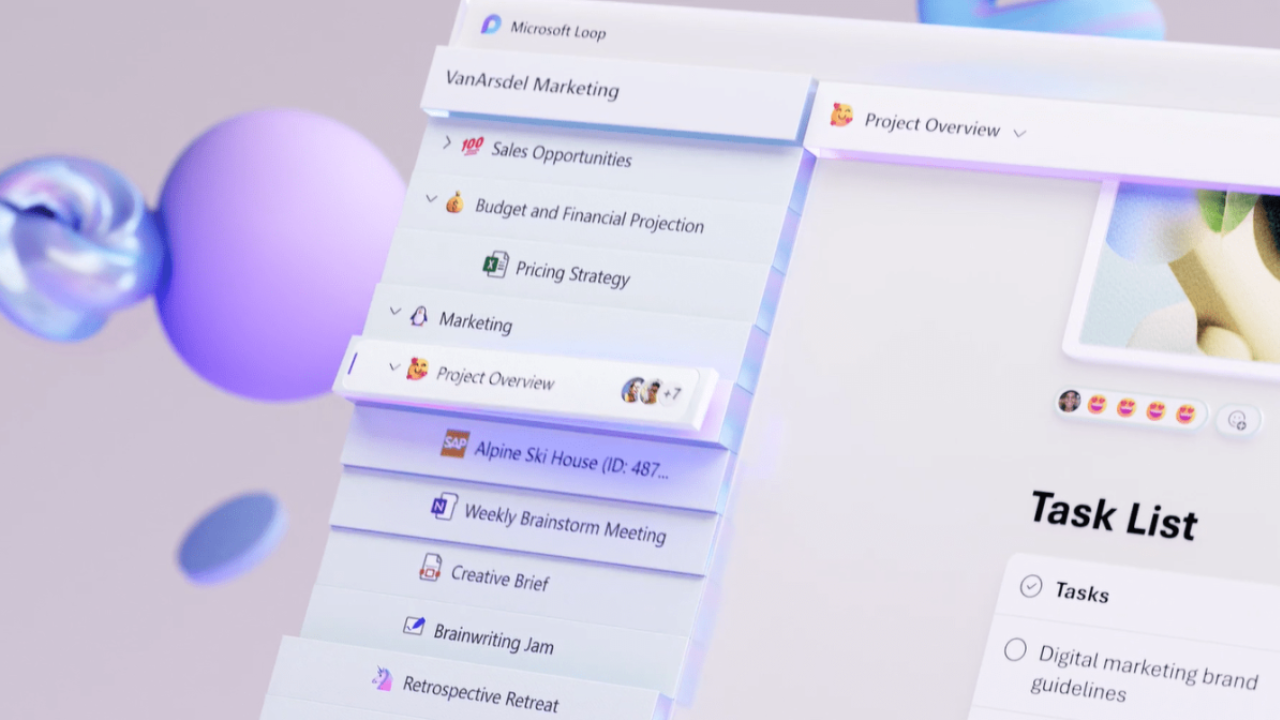

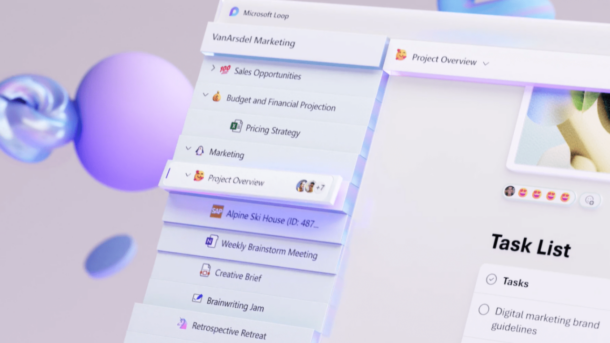

Microsoft’s NEW Loop Feature to Challenge Notion

Podcast - Apr 19, 2024

Securing Enterprise Devices: Embracing Zero Trust Security

Last Update: Apr 17, 2024

- Sep 05, 2023

How to Properly Secure and Govern Microsoft Entra ID Apps

Last Update: Apr 17, 2024

- Oct 19, 2023

Revealed: The Cost of Staying Secure on Windows 10

Podcast - Last Update: Apr 16, 2024

- Apr 12, 2024

Microsoft Entra ID App Registration and Enterprise App Security Explained

Last Update: Apr 17, 2024

- Oct 19, 2023

5 Reasons to Consolidate Active Directory Domains and Forests

Last Update: Apr 16, 2024

- Feb 14, 2024

Securing Enterprise Devices: Embracing Zero Trust Security

The widespread use of digital devices in enterprises and their exposure to various networks have increased the probability of cyber-attacks. Enterprise-owned devices contain confidential data that hackers can easily access if the devices are not managed effectively, which can damage an organization's value and reputation. Thus, data security is now of…

Last Update: Apr 17, 2024

Sep 5, 2023

Regulatory Compliance with Microsoft 365

Keeping your business in compliance with the regulatory policies that apply to it can be challenging. Many companies use Microsoft 365 to work with unstructured personal data that is covered by laws requiring your organization to follow different compliance procedures. This includes responding to regulatory requirements, assessing compliance risks,…

Last Update: Apr 17, 2024

Jan 25, 2022

Real World SmartDeploy with Barry Weiss of the Gordon and Betty Moore Foundation

After struggling for years with tools like Microsoft Deployment Toolkit (MDT), Barry Weiss heard about a simpler and less expensive solution called SmartDeploy at a Microsoft conference.

Last Update: Apr 17, 2024

Jan 20, 2022

Ransomware Risks for Microsoft 365

With the rise in remote workers, the risk of ransomware is higher than it has ever been. By now, most people know that ransomware is a malware extortion scheme that typically encrypts files and folders to prevent access to critical data, and that it can sometimes also be used to steal sensitive data. After…

Last Update: Apr 17, 2024

Oct 26, 2021

Protecting the Different Types of Microsoft 365 Data

Microsoft 365 is an indispensable collection of tools for businesses. While Microsoft is responsible for the availability and ongoing functionality of all the Microsoft 365 apps, protecting Microsoft 365 data is the customer’s responsibility. Let’s take a closer look at the different types of Microsoft 365 data and the kind of protection…

Last Update: Apr 17, 2024

Nov 29, 2021

Protecting Microsoft 365 from Ransomware Attacks with FileWall

FileWall for Exchange is designed to work using Microsoft’s Graph API and integrates directly with the service.

Last Update: Apr 17, 2024

Feb 24, 2021

Thank you to our petri.com site sponsors

Our sponsors help us keep our knowledge base free.

Petri Experts Spotlight

Laurent Giret Editorial Manager

- First Ring Daily: Did Copilot Do That? Apr 19, 2024

- First Ring Daily: Intel Does AI Apr 12, 2024

Michael Reinders Petri Contributor

Michael Otey Petri Contributor

- Install and Use SQL Server Report Builder Apr 10, 2024

- SQL Server Essentials: What Is a Relational Database? Mar 12, 2024

Stephen Rose Chief Technology Strategist

- UnplugIT Episode 2 – In The Loop Jun 13, 2023

- Unplugging What’s Next for Teams 2.0 May 30, 2023

Sukesh Mudrakola Petri Contributor

Shane Young Petri Contributor

Flo Fox Petri Contributor

Sagar Petri Contributor

- How to Create a Dockerfile Step by Step Nov 14, 2023

- What is Amazon Kinesis Data Firehose? Jul 14, 2023

Chester Avey Petri Contributor

Sander Berkouwer Petri Contributor

Bill Kindle Petri Contributor

Wim Matthyssen Petri Contributor

Sponsored Articles List

-

Securing Enterprise Devices: Embracing Zero Trust Security

The excessive use of digital devices in enterprises and their exposure to various networks have increased the probability of cyber-attacks. Enterprise-owned devices contain confidential data that hackers can easily access if devices are not controlled efficiently, and that can cause damage to the values and reputation of the organizations. Thus, data security is now of…

Last Update: Apr 17, 2024

Sep 5, 2023 -

Compliance

Microsoft 365

SharePoint Online

Regulatory Compliance with Microsoft 365

Making sure your business is in compliance with the various regulatory policies that you need to work with can be challenging. Many companies use Microsoft 365 to work with unstructured personal data that are covered by laws that require your organization to follow different compliance procedures. This includes responding to regulatory requirements, assessing compliance risks,…

Last Update: Apr 17, 2024

Jan 25, 2022 -

Endpoint Protection

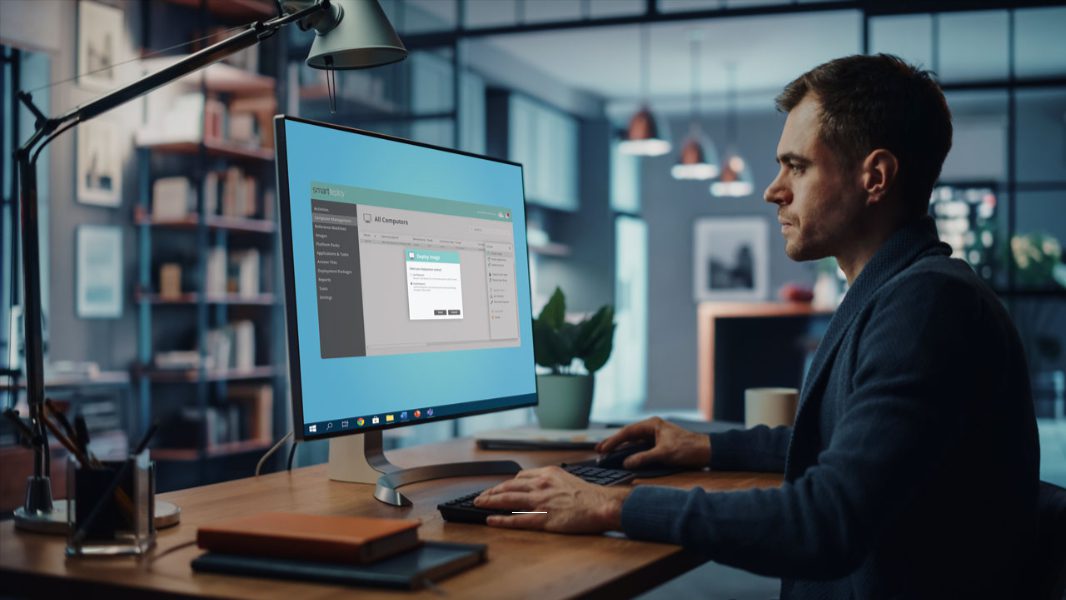

Real World SmartDeploy with Barry Weiss of the Gordon and Betty Moore Foundation

After struggling for years with tools like Microsoft Deployment Toolkit (MDT), Barry Weiss heard about a simpler and less expensive solution called SmartDeploy at a Microsoft conference.

Last Update: Apr 17, 2024

Jan 20, 2022 -

Microsoft 365

Ransomware

Ransomware Risks for Microsoft 365

With the rise in remote workers the risk of ransomware is higher than it has ever been before. By now most people know that ransomware is a type of malware extortion scheme that typically encrypts files and folders preventing access to critical data or sometimes it can also be used to steal sensitive data. After…

Last Update: Apr 17, 2024

Oct 26, 2021 -

Backup & Storage

Cloud Computing

Microsoft 365

Microsoft Azure

Microsoft Teams

Protecting the Different Types of Microsoft 365 Data

Microsoft 365 is an indispensable collection of tools for businesses. While Microsoft is responsible for the availability and ongoing functionality of all the Microsoft 365 apps, the responsibility for protecting Microsoft 365 data is the customer’s obligation. Let’s take a closer look at the different types of Microsoft 365 data and the kind of protection…

Last Update: Apr 17, 2024

Nov 29, 2021

PODCASTS

Microsoft’s NEW Loop Feature to Challenge Notion

Microsoft Loop is the next best thing since… well, the productivity and note-taking app ‘Notion’. On This Week in IT, I look at how Microsoft is planning to challenge Notion and how current features are building towards a big update that customers have requested from Microsoft.

Revealed: The Cost of Staying Secure on Windows 10

On This Week in IT, I look at the recently announced pricing for Extended Security Updates (ESUs) on Windows 10 if you want to stay secure beyond the end-of-support date in October 2025. I also cover the different ways you can get the updates and what it means for your organization.

OUR SPONSORS

Cayosoft

Cayosoft delivers the only unified solution enabling organizations to securely manage, continuously monitor for threats or suspect changes, and instantly recover their Microsoft platforms.

ManageEngine

Monitor, manage, and secure your IT infrastructure with enterprise-grade solutions built from the ground up.